Thomas Whelan, along with a team from the Dyson Robotics Laboratory at Imperial College London, has devised a way to add much more realistic lighting effects to augmented reality. They call it Elastic Fusion.

I’ll give you a super simple explanation of this breakthrough, a slightly more complex explanation, and a video. If you want the much more complex version (and it gets much more complex), feel free to dig into the paper here. If you have the technical chops, it’s worth your time.

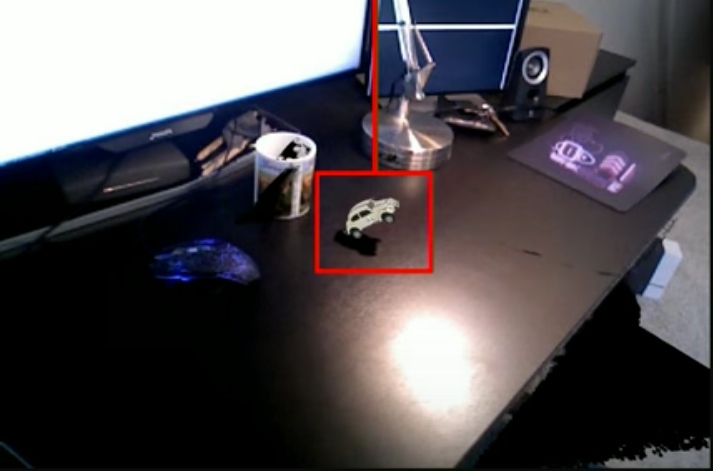

The super simple version: Elastic Fusion helps augmented reality devices display digital objects with lighting effects, so the objects look like they belong in their surroundings. If an AR device projects a little digital car onto your desk, and there's a real lamp behind that car, Elastic Fusion will make sure the car casts a realistic shadow.

The slightly more complex version: The algorithm Elastic Fusion uses to build a 3D model of its surroundings is also capable of detecting multiple light sources in that environment. When the team scans a scene with a 3D scanner (a depth-sensing camera) to gather data for an augmented reality overlay, the algorithm works out where those lights are and records them as part of the model. The AR system then uses that model to light virtual objects more realistically.

The much more complex version: Here's Whelan's paper. You're on your own.

Here’s a video. It’s worth watching in full, but the really amazing part starts around the 3:32 mark. Enjoy!