Update 2/15: After receiving updated information from Matterport, I have changed the “limitations” section to a “speed of capture” section that includes information about using a 360° camera without a tripod.

In the past few years, Matterport has been busy. Most notably, the company brought its Pro-series 3D capture device (and the processing workflow that goes with it) from the real estate market into the AEC market, and then partnered with Leica Geosystems to fully integrate data from the popular BLK360 lidar scanner into the Matterport workflow. This year, the company is pushing into even more markets with the release of its new platform, Cloud 3.0.

With this release, Matterport now offers users the ability to upload 360° photos and turn them into 3D models. By enabling the use of a sub-$400 device for 3D capture, Cloud 3.0 brings an extremely affordable option into the Matterport portfolio. But the real story is in how Matterport processes this data. Tomer Poran, senior business development manager at Matterport, explains that this functionality relies on the company’s Cortex technology, an AI-powered computer-vision processor that infers 3D models from the 360° data. (Read: this solution is not taking the 360° images and generating a model with photogrammetry.)

To find out more, I caught up with Poran on the phone.

What are the specs?

First, we discussed the question you all want answered. What’s the quality of a 3D scan that a machine infers from 2D data?

Poran says the resolution of this 3D “capture” is dependent on the 360° camera you use. Naturally, a 3D capture generated from a Ricoh Theta is not going to offer the same resolution as one from a BLK360, or even a Matterport Pro. Similarly, don’t expect survey-grade accuracy, since Poran tells me that the 3D models have what Matterport has termed “an 8% max error.”
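To make “an 8% max error” concrete: the article does not say exactly how Matterport defines the figure, so the sketch below assumes it means the worst-case relative error between a distance measured in the model and the true distance. The function names and the sample room dimensions are hypothetical, chosen only for illustration.

```python
def max_relative_error(predicted, reference):
    """Largest |predicted - reference| / reference over paired distances.

    Assumption: "max error" is read as a worst-case relative error on
    distances; Matterport's exact definition is not given in the article.
    """
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need equal-length, non-empty measurement lists")
    return max(abs(p - r) / r for p, r in zip(predicted, reference))


def error_band(true_length_m, max_error=0.08):
    """Range a measurement could fall in if its error is bounded by max_error."""
    return (true_length_m * (1 - max_error), true_length_m * (1 + max_error))


# Hypothetical tape-measured dimensions vs. values read off a model (meters).
reference = [4.00, 3.20, 2.50]
predicted = [4.12, 3.05, 2.55]
print(f"max relative error: {max_relative_error(predicted, reference):.1%}")

low, high = error_band(5.0)
print(f"a 5 m wall could read anywhere from {low:.2f} m to {high:.2f} m")
```

Under this reading, an 8% bound on a 5 m wall still allows a reading off by up to 40 cm, which is why the figure suits visualization and rough measurement rather than survey work.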

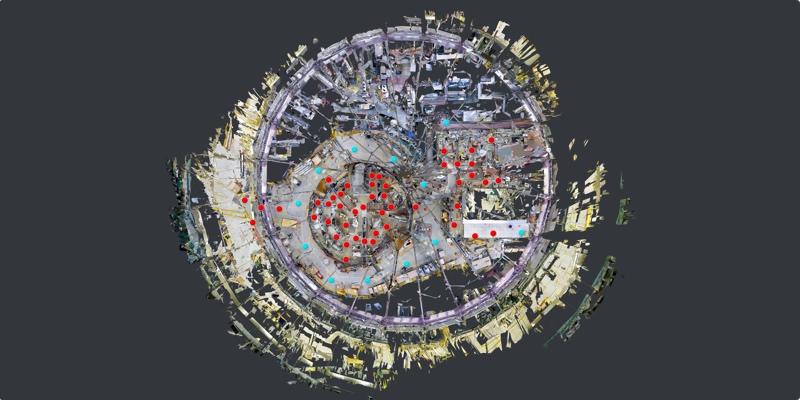


A screenshot of a Matterport Cortex model.

Who is it for?

Poran readily acknowledges that this puts the system at the low end of the accuracy spectrum. However, if you know Matterport’s Pro 2, you also know that the company doesn’t see this specification as a stumbling block. Poran emphasizes that the system offers a very low price point, a quick workflow, and results that are more than accurate enough for visualization applications and very basic measurement needs.

That means it’s still good enough for a lot of applications. It could work in real estate, home inspection, and the growing property insurance market, where most users have never seen a terrestrial 3D scanner. But it could also find fans in AEC and FM, where firms already own a number of 360° cameras and 360° tour applications have already gained a significant foothold.

In fact, Poran says that the new Cortex-enabled workflow is something of a response to the demand from customers in the AEC space. Many of these users wanted to create automatic 360° tours of a space using the Matterport user interface and user experience, but didn’t want to lay out the funds for a Matterport Pro series scanner just to capture some visualizations. Now they won’t have to.

Matterport is hopeful that the workflow’s specs hit a sweet spot to “open up even more use cases for 3D” that haven’t been possible with previous 3D tools. Beyond offering industries that already use 3D capture an even lower-cost method for getting their data, it could find users among homeowners who want a quick capture of their space for applications like interior design or insurance documentation.

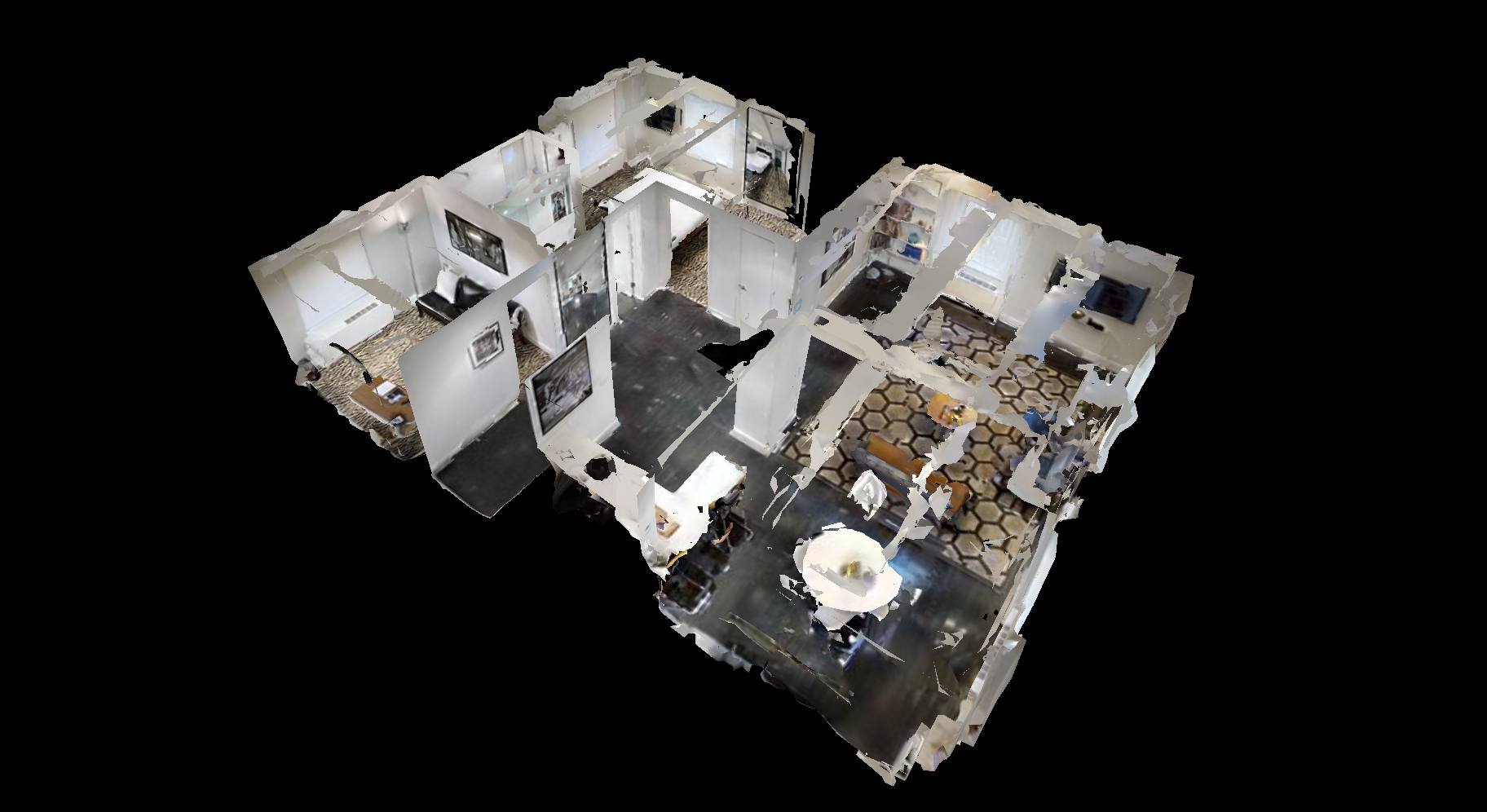

A Matterport capture aligned with FARO lidar scans. Credit: Paul Tice, ToPa 3D.

How fast can you capture with Cortex?

Matterport knows that there’s still one parameter that it needs to improve to capture the 3D visualization market for AEC and FM: speed.

Poran says getting the best-quality results still requires using a tripod and stepping out of the camera’s view when the picture is taken. Working this way, a user can capture about 7,000 to 8,000 square feet per hour.

Users can also capture without a tripod. That raises the pace to about 10,000 to 20,000 square feet per hour, a great speed for documenting large facilities and projects, at the cost of lower-quality visualizations and an operator who appears in the images. Matterport recommends the following non-tripod methods, in order from least blur to most: mounting the camera on a stick, attaching it to your hardhat, or holding it in your hand.
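The two capture rates Poran quotes translate directly into on-site time. The sketch below estimates capture hours for a hypothetical 100,000-square-foot facility, assuming the rate holds constant across the whole site; it ignores setup, breaks, upload, and Cortex processing time, none of which the article quantifies.

```python
# Capture-rate ranges quoted for Cortex, in square feet per hour.
RATES_SQFT_PER_HOUR = {
    "tripod": (7_000, 8_000),
    "no tripod": (10_000, 20_000),
}


def capture_hours(area_sqft, rate_range):
    """Return (fastest, slowest) capture time in hours, assuming a constant rate."""
    low_rate, high_rate = rate_range
    return area_sqft / high_rate, area_sqft / low_rate


area = 100_000  # hypothetical facility size in square feet
for method, rates in RATES_SQFT_PER_HOUR.items():
    fastest, slowest = capture_hours(area, rates)
    print(f"{method}: {fastest:.1f} to {slowest:.1f} hours")
```

For this example, going tripod-free cuts the job from roughly 12.5 to 14.3 hours down to 5 to 10 hours, which is the trade-off against image quality described above.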

When I asked if these alternate methods would affect the max error number he quoted me earlier, Poran said, “we’ll only know at the end of the beta, but I don’t expect it will. The camera can take up to a 17% angle (!) without impacting alignment, so shouldn’t matter.” However, he was careful to note that there is “not enough testing to know for sure.” Remember, this is a beta product, so we’re discussing an early incarnation of the technology.

How does Cortex work?

Matterport used a machine-learning approach to give Cortex its 3D powers.

More specifically, the company used deep learning. This methodology involves taking a neural network (a computing system loosely inspired by the way neurons in the brain connect) and teaching it to perform a task by feeding it lots of examples. If you want to teach a neural net to recognize kinds of food, for instance, you load it up with lots of labeled images of hot dogs, lettuce, pie, and so on. The more labeled pictures you feed it, the better it gets at recognizing what kind of food is in the picture.

As is true of a lot of companies moving into machine learning and deep learning, Matterport has an enormous amount of data to feed a neural net: to date, Pro 2 devices have captured color images and depth maps for about a million and a half homes around the world. Poran says that each of these data sets “consists of an average of 60 scans,” and that “each scan consists of about 127 individual photographs.” Some quick napkin math (1.5 million × 60 × 127) puts that at more than 11 billion photographs, all tied to depth data.
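Written out, the napkin math is just two multiplications on Poran’s figures. Both per-home and per-scan numbers are averages he quoted, so the totals are approximations rather than exact counts.

```python
# Figures quoted by Poran (averages and approximations).
HOMES = 1_500_000
SCANS_PER_HOME = 60
PHOTOS_PER_SCAN = 127

total_scans = HOMES * SCANS_PER_HOME
total_photos = total_scans * PHOTOS_PER_SCAN

print(f"scans to date:  {total_scans:,}")
print(f"photos to date: {total_photos:,}")
```

That works out to about 90 million scans and roughly 11.4 billion photographs.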

Matterport fed that data to a neural network and trained it to recognize how the RGB data for an object relates to that object’s depth measurements. As a result of this training process, Cortex got surprisingly good at taking 2D images of a space and inferring what it looks like in 3D, with a “max error” of 8%.
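Matterport has not published Cortex’s architecture, so the following is only a toy illustration of the underlying idea of supervised training: fit a function to (input, label) pairs by repeatedly nudging its parameters to shrink the error. It uses a single linear unit (the simplest possible “network,” with no hidden layers) trained by gradient descent. The three input numbers are hypothetical stand-ins for image data, and the depth labels come from an invented rule; a real monocular-depth network uses many layers and the spatial context of whole images.

```python
import random


def make_examples(n, seed=0):
    """Synthetic (features, depth) pairs from an invented rule (illustration only)."""
    rng = random.Random(seed)
    true_w = [1.0, 2.0, -0.5, 0.3]  # bias, then three made-up feature weights
    examples = []
    for _ in range(n):
        x = [1.0] + [rng.random() for _ in range(3)]  # leading 1.0 is the bias input
        depth = sum(w * xi for w, xi in zip(true_w, x))
        examples.append((x, depth))
    return examples


def train(examples, lr=0.5, epochs=2000):
    """Fit weights by full-batch gradient descent on half the mean squared error."""
    w = [0.0] * len(examples[0][0])
    n = len(examples)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, target in examples:
            err = sum(wi * xi for wi, xi in zip(w, x)) - target
            for j, xj in enumerate(x):
                grad[j] += err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


weights = train(make_examples(100))
print([round(v, 3) for v in weights])  # should approach [1.0, 2.0, -0.5, 0.3]
```

The model never sees the rule itself, only labeled examples, yet it recovers the weights. Cortex applies the same principle at vastly larger scale, with real RGB images as inputs and Pro 2 depth measurements as labels.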

The company also has more training data coming in regularly. Poran says the platform is adding 100,000 scans a month, which means Cortex should keep improving behind the scenes as time goes on.

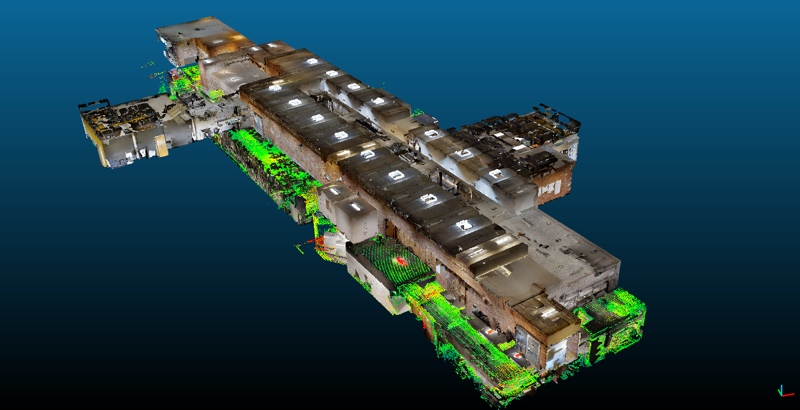

Matterport’s Pro2 device

A new kind of 3D tool?

It’s tempting to call this a whole new category of 3D capture, what we might term AI-assisted, computer-vision 3D capture. But here the term “capture” seems a bit off. That’s because the system is not actually capturing 3D data per se, but rather looking at 2D 360° data and extrapolating an impressively accurate 3D model from that information.

Put another way, it’s taking data from a relatively low-quality sensor and using computational muscle to turn that data into something richer and more complex. Sound familiar? It should, because it’s reminiscent of what Apple and Google are doing with their smartphone cameras. These companies take images from their tiny smartphone image sensors and process them using computer vision and AI technologies, enabling them to produce much richer and more detailed images than the sensor hardware alone would seem to allow.

This technology is called computational photography. So is it possible that Matterport’s Cortex is a sign that we’re entering an era of computational 3D sensing?

For more information about Matterport’s technology offerings, check the company’s website and get in touch with them directly.