Urban Robotics announces its presence with “dense 3D extraction technology”

PORTLAND, Ore. – After more than eight years developing technology that has been used, thus far, exclusively by the Department of Defense, Urban Robotics is beginning to explore opportunities in the commercial marketplace for its “dense 3D extraction technology,” which uses cloud and cluster computing, along with specially developed algorithms, to rapidly create massive colorized point clouds from digital photographs.

With the DoD, “the main focus has been on aerial imaging,” said Geoff Peters, the company’s CEO, “and to do it in a very fast turnaround with massive datasets … We’re dealing with tens of thousands of images and we turn around the product in 24 hours, and that includes the collections.”

The company was founded primarily by computer vision engineers from Intel, who have worked on taking 2D and orthographic datasets and repositioning every single pixel in 3D space. But Peters thinks it is also the speed at which the company can do this that sets it apart: “If we’re not done by the end of the 24-hour period, then we’re not successful.”

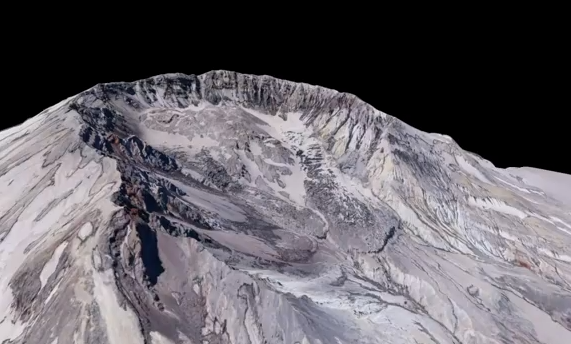

You can view a demonstration of the company’s capabilities, working from aerial photos of Mt. St. Helens, in the following video:

So, how do these point clouds compare to lidar data? “From a dataset perspective,” said Peters, “it looks exactly like a lidar data set. You can ingest it into your existing lidar data sets.” The Urban Robotics point cloud also has the advantage, he said, of already having RGB values associated with each point, so no separate co-registration with an RGB sensor is needed.

However, whereas lidar produces a grid of points, the Urban Robotics point cloud is more organic: you get points where you get pixels. “It’s mostly a matter of how much coverage do you have of that area,” said Peters. “We’ll do a dense one off of video, where it’s being taken from different angles, and you’ll get a really nice cloud even from side and oblique angles. Whereas if you’re just using images from above in the area, then you get points where you get overlapping view points from different angles.”

That is because, while lidar sends an active pulse and measures the response, Urban Robotics’ algorithms rely on passive illumination from the sun or ambient light and measure angles instead. The company needs at least two data points, preferably three. Like lidar data, the point cloud can be georeferenced using GPS and IMUs, and the company even has experience georeferencing without IMUs.
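The general principle behind recovering 3D points from overlapping passive images can be illustrated with classic linear (DLT) triangulation from two views. This is a generic textbook technique, not Urban Robotics’ proprietary method. The sketch assumes each camera’s 3x4 projection matrix is already known (in practice it would come from calibration plus pose data such as GPS/IMU), and the helper names `triangulate_dlt` and `project` are hypothetical. The pixel color attached at the end is likewise a made-up value, shown only to mimic a colorized point.

```python
import numpy as np


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices, assumed known (hypothetical inputs).
    x1, x2: (u, v) image coordinates of the same feature in each view.
    Returns the estimated 3D point as a length-3 array.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view contributes two linear equations in the homogeneous point X.
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector for the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    # Convert from homogeneous to Euclidean coordinates (also fixes the sign).
    return X[:3] / X[3]


def project(P, X):
    """Project a 3D point with a 3x4 camera matrix; returns (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


if __name__ == "__main__":
    # Toy setup (assumed): identity intrinsics, second camera shifted 1 unit along x.
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
    X_true = np.array([0.5, 0.2, 4.0])

    # Simulate the two overlapping observations, then recover the point.
    x1, x2 = project(P1, X_true), project(P2, X_true)
    X_est = triangulate_dlt(P1, P2, x1, x2)

    rgb = (120, 130, 90)  # hypothetical pixel color sampled from image 1
    print("colorized point:", np.round(X_est, 6), rgb)
```

With only two views the solution is exactly determined up to noise; adding a third view simply appends two more rows to `A`, which makes the least-squares estimate more robust when the image measurements are noisy.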

Peters said, though, that he doesn’t see his company as going head to head with lidar: “We look at it as more that there are many applications where lidar isn’t cost effective, and having simple imaging devices is.”

What about accuracy? Peters said accuracy depends on the properties of the collection device, and the company has worked hard to be sensor-agnostic. Because Urban Robotics’ algorithms deal with every pixel in the image, its accuracy is “better than other extraction that’s out there, because we’re so dense,” but Peters wouldn’t go so far as to say its accuracy is yet apples to apples with lidar.

“I would say the technology is still young and so still forming and that it has some room to grow,” he said.

The company is currently looking for partners to explore the commercial marketplace through a new firm it is launching, Urban Robotics Commercial Ventures, which will be headed by David Boardman.

Boardman said it was too early to talk about what the channel to market would be, but said, “we’re looking for real-world, large-scale problems to solve, where we can make sure we can solve the problem and make sure it’s a valuable problem to solve. We’re looking for companies where we can see if it makes sense to pursue a commercial opportunity together.”