For autonomous vehicle sensing and perception, “seeing” is not the same as understanding. An object detected by sensors could be a statue, a person, a tree, or another car. Most autonomous vehicle perception systems rely on measurements of size, position, and velocity for clues to an object’s identity. Some solutions use machine learning to a degree, but there are limits to what a system can be taught, especially when it must make correct decisions in an ever-changing, busy environment like a public roadway.

At September’s AutoSens in Brussels (an expo and conference focusing on automotive sensor and perception technology), a new “3D Semantic Camera” was launched that could fundamentally change how autonomous driving systems perceive and comprehend their surroundings. The camera combines hyperspectral analysis and SLAM (simultaneous localization and mapping) technology in a single device to identify surrounding objects and analyze road conditions.

The camera’s maker, Outsight, is a new company formed from former Velodyne partner Dibotics, which made its name in smart machine perception and real-time processing solutions for 3D data.

One of the primary concerns for autonomous vehicles, especially those aimed at consumer use, is safety: for the driver and passengers inside the car as well as for pedestrians outside it. For Outsight CEO and co-founder Cedric Hutchings, this safety concern is what has driven the development of the company’s products.

“In the US alone, approximately 4 million people are seriously injured by car accidents every year. At Outsight, we believe in building safer mobility by making vehicles much smarter.”

Outsight’s sensor combines software and hardware to deliver capabilities such as remote material identification alongside comprehensive real-time 3D data processing. According to the company, this gives systems an unprecedented and cost-efficient ability to perceive, understand, and ultimately interact with their surroundings in real time.

“We are excited to unveil our 3D Semantic Camera that brings an unprecedented solution for a vehicle to detect road hazards and prevent accidents,” said Hutchings.

The technology seems revolutionary, especially for something packed into a single device. It promises “Full Situation Awareness,” with the ability to both perceive and comprehend the environment. It can even tell the difference between skin and fabric, ice and snow, or metal and wood.

The primary concern for automakers is avoiding pedestrians and cyclists, which this analysis can distinguish by their material makeup (e.g., the metal in a bicycle reads differently than a person walking alone). The same ability to distinguish materials can also help assess road conditions, spotting hazards like black ice that may look like water to a human driver.

The device accomplishes this with a low-power, long-range shortwave infrared (SWIR) laser that identifies material composition through active hyperspectral analysis. The SWIR laser can scan farther than traditional lidar systems, extending the view (and thus the reaction time) of autonomous systems. Combined with its 3D “SLAM on Chip” capability, the sensor can interpret the world in real time, something Hutchings describes as an “unprecedented solution” for detecting road hazards.
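To make the link between detection range and reaction time concrete, here is a minimal Python sketch. It assumes a constant closing speed and a straight-line approach with no braking; the function name `seconds_of_warning` and the 100 m and 200 m ranges are hypothetical illustrations, not Outsight specifications.

```python
# Hypothetical illustration: how much warning time a given detection range buys.
# The ranges used below are made-up examples, NOT Outsight's published specs.

def seconds_of_warning(detection_range_m: float, closing_speed_kmh: float) -> float:
    """Time until the vehicle reaches an object first detected at
    detection_range_m, assuming a constant closing speed (no braking)."""
    if closing_speed_kmh <= 0:
        raise ValueError("closing speed must be positive")
    closing_speed_ms = closing_speed_kmh / 3.6  # convert km/h to m/s
    return detection_range_m / closing_speed_ms


if __name__ == "__main__":
    for range_m in (100.0, 200.0):  # hypothetical detection ranges
        t = seconds_of_warning(range_m, 108.0)
        print(f"{range_m:.0f} m at 108 km/h -> {t:.1f} s of warning")
```

At 108 km/h (30 m/s), a 100 m range leaves about 3.3 s before reaching the object, while 200 m leaves about 6.7 s. Under this simple model, warning time scales linearly with detection range, which is why a longer-reaching sensor gives the planning system more time to react.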

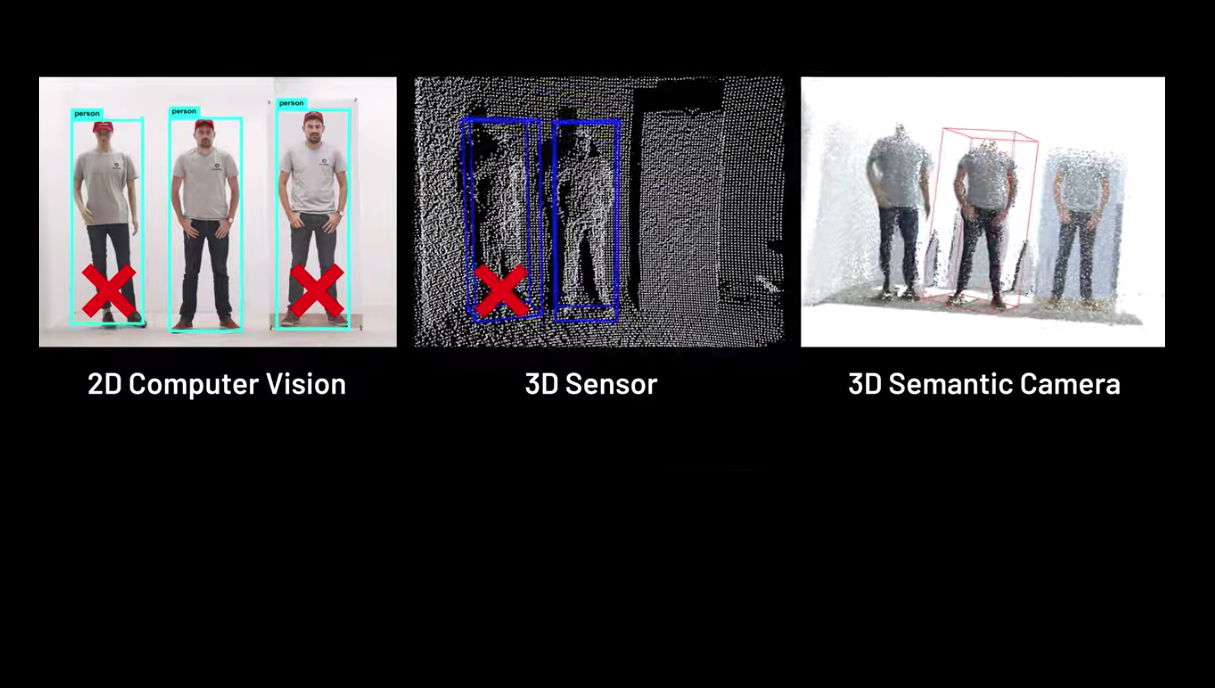

In a demonstration video, Outsight’s camera distinguishes between a person, a mannequin, and a photo of a person better than 2D cameras and traditional scanning systems.

Additionally, unlike other systems, this solution can provide object identities and actionable information without the use of machine learning. This stand-alone capability means that it requires less processing, consumes less energy, and operates at a lower bandwidth.

Developers do not need to rely on massive datasets to “train” the system, lessening the potential for errors. The system’s interpretations are cross-checked against the object’s material to add another level of confidence. This may provide a much fuller understanding of the surrounding environment and any potential hazards.

The camera provides the position, size, and the full velocity of all moving objects in its surroundings. All of this data combined provides valuable information for route planning and decision making in an autonomous system, such as an ADAS (Advanced Driver Assistance System). Other potential applications include construction and mining equipment, helicopters, drones, and the ultimate goal – completely self-driving cars.

Outsight is working with OEMs and Tier 1 suppliers in the automotive, aeronautics, and security markets, and plans to open the technology up to other partners in the first quarter of 2020. Raul Bravo, President and Co-founder of Outsight, envisions even more applications for the new camera.

“With being able to unveil the full reality of the world by providing information that was previously invisible, we at Outsight are convinced that a whole new world of applications will be unleashed. This is just the beginning.”